Multi-Framework Coherence Checks

Continuous meaning preservation for complex systems

Complex systems rarely fail all at once.

They fail when meanings drift apart across models that still appear to be working.

Think of the 737 MAX MCAS failures—

sensor data versus pilot control frameworks.

The 2008 financial crisis—

AAA ratings versus actual risk frameworks.

Or COVID-era policy breakdowns—

epidemiological versus economic models.

These were not simple bugs.

They were not data errors.

They were coherence failures.

Multi-Framework Coherence Checks detect these failures early—

before decisions break,

before trust erodes,

before repair becomes impossible.

The Problem

Modern systems span multiple frameworks at once:

- Statistical models
- Physical simulations
- Machine learning systems
- Human decision layers

Each framework can be internally valid

while the combination is incoherent.

Outputs remain within bounds.

Dashboards stay green.

Meaning quietly diverges.

Our Solution

Multi-Framework Coherence Checks provide continuous verification that shared concepts still mean the same thing across systems.

We do not ask whether results look plausible.

We ask whether reasoning remains aligned.

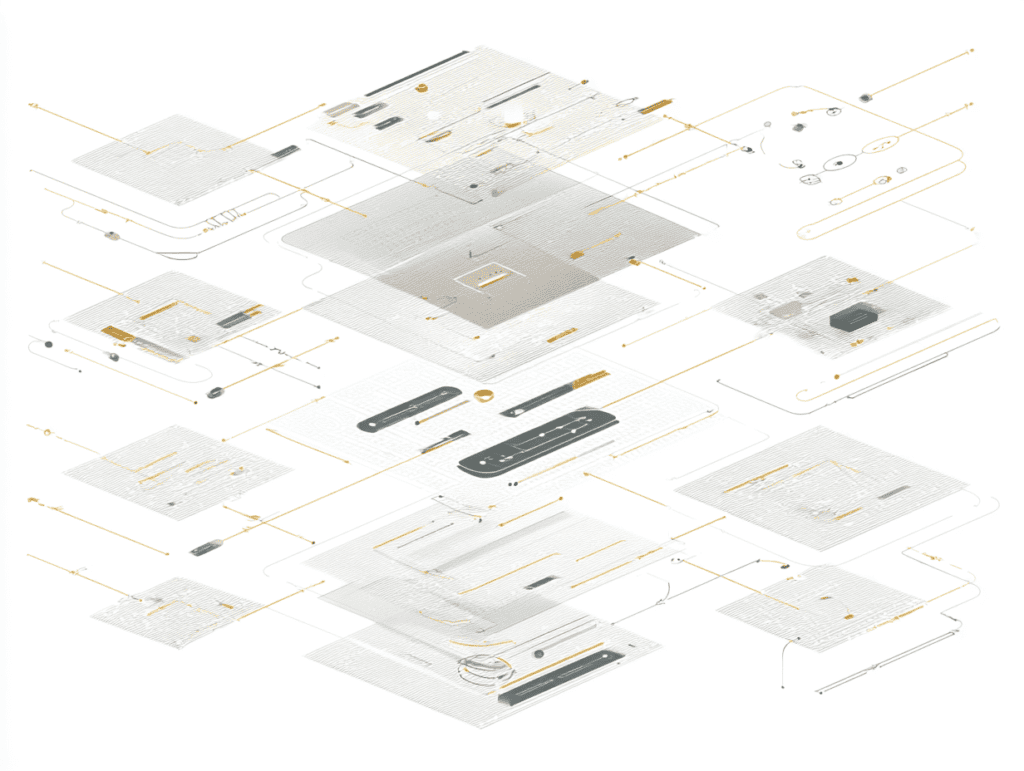

How It Works

The Coherence Engine

At every point where frameworks interact, we apply a stable test:

- Which frameworks are active
- Which concepts are shared or exchanged
- What invariant operational support defines each concept
- Whether those supports still align

If meaning aligns, coherence is preserved.

If meaning diverges, the inconsistency is flagged immediately.

Think of it as conceptual version control.

We track the definitional branch of every critical concept.

If risk in Framework B diverges from risk in Framework A, you receive a merge-conflict alert—

before the divergence is embedded in decisions.
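The test above can be sketched in a few lines of Python. This is an illustrative sketch only, not the Clarus implementation: it assumes a concept's "operational support" can be written down as explicit metadata (unit, expected value range, time horizon), and the names `ConceptSupport` and `find_conflicts` are hypothetical.

```python
from dataclasses import dataclass, fields
from itertools import combinations


@dataclass(frozen=True)
class ConceptSupport:
    """Hypothetical record of what one framework means by a shared concept."""
    framework: str
    concept: str
    unit: str            # e.g. "probability" or "USD"
    value_range: tuple   # (low, high) the framework expects
    horizon_days: int    # time window the value refers to


def find_conflicts(supports):
    """Compare every pair of frameworks that share a concept.

    Returns (concept, framework_a, framework_b, field_name) tuples,
    one per definitional field on which the two frameworks disagree.
    """
    compared = [f.name for f in fields(ConceptSupport)
                if f.name not in ("framework", "concept")]
    conflicts = []
    for a, b in combinations(supports, 2):
        if a.concept != b.concept:
            continue
        for name in compared:
            if getattr(a, name) != getattr(b, name):
                conflicts.append((a.concept, a.framework, b.framework, name))
    return conflicts


# Assumed example data: three frameworks that all exchange "risk".
supports = [
    ConceptSupport("simulation", "risk", "probability", (0.0, 1.0), 30),
    ConceptSupport("predictor", "risk", "probability", (0.0, 1.0), 365),
    ConceptSupport("optimizer", "risk", "USD", (0.0, 1e6), 365),
]

for conflict in find_conflicts(supports):
    print("merge conflict:", conflict)
```

Exact equality on each field is the simplest possible alignment rule. A real check would likely need tolerances or unit conversions before declaring a conflict.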

Example

A Coherence Failure in Practice

An AI-driven system combines:

- A physics-based simulation
- A data-driven prediction model
- A downstream optimization engine

All three reference risk.

Each defines it differently.

Without coherence checks, the system produces confident but unstable decisions.

With coherence checks, divergence is detected early—

before outputs are trusted,

before deployment,

before scale.

What You Get

- A Conceptual Integrity Dashboard showing stable and drifting concepts
- Pre-failure alerts when meaning diverges
- A live map of conceptual dependencies across frameworks
- Clear identification of incompatible assumptions
- Options for alignment, scoping, or enforced separation

Coherence is not assumed.

It is continuously verified.

When You Need This

Use Multi-Framework Coherence Checks when:

- Multiple models feed a single decision process
- Systems combine symbolic, statistical, and simulation-based reasoning
- Outputs persist across time, scale, or abstraction
- Results look correct but feel brittle or unstable

If frameworks interact continuously, coherence must be maintained continuously.

Integration and Overhead

Coherence Checks are non-invasive.

We instrument framework interactions through APIs, logs, and model metadata—

not by rewriting systems.

The checks run as a parallel verification layer.

Measured in milliseconds.

Not months.
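One way to picture a parallel verification layer is a background worker that reads small metadata records while the producing systems carry on. The sketch below uses Python's standard `queue` and `threading` modules. The event fields (`source`, `concept`, `unit`) and the `EXPECTED_UNITS` registry are assumptions made for illustration, not a documented Clarus interface.

```python
import queue
import threading

# Hypothetical registry: the unit each shared concept is expected to carry.
EXPECTED_UNITS = {"risk": "probability"}

events = queue.Queue()   # interaction records copied from APIs or logs
alerts = []              # filled by the verifier thread


def verifier():
    """Consume interaction events and flag unit mismatches off the hot path."""
    while True:
        event = events.get()
        if event is None:          # sentinel: stop the worker
            break
        expected = EXPECTED_UNITS.get(event["concept"])
        if expected is not None and event["unit"] != expected:
            alerts.append(event)


worker = threading.Thread(target=verifier, daemon=True)
worker.start()

# The producing system only enqueues a small metadata record and moves on.
events.put({"source": "predictor", "concept": "risk", "unit": "probability"})
events.put({"source": "optimizer", "concept": "risk", "unit": "USD"})

events.put(None)   # shut the worker down for this demo
worker.join()
print(alerts)
```

Because producers only enqueue a record, the check adds no blocking work to the systems being observed. The cost of that choice is that alerts arrive slightly after the interaction they describe.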

Why Clarus

Traditional monitoring checks whether outputs stay within bounds.

We check whether the meanings behind those outputs are still aligned.

A system can be statistically correct

and conceptually broken.

Clarus detects that break while correction is still possible.

The Outcome

Your systems remain aligned.

Your decisions remain interpretable.

Your reasoning remains valid as complexity scales.

Multi-Framework Coherence Checks do not slow systems down.

They prevent systems from drifting apart.